The PySpark saveAsTable() method, available on the DataFrameWriter class, saves the contents of a DataFrame as a table in a database. The table is registered in Spark’s catalog, so the data persists beyond the current session and can be queried by name. In this article, we will explore the saveAsTable() method and how to use it to save DataFrames as tables.

1. What is PySpark saveAsTable?

The saveAsTable() method is provided by Spark’s DataFrameWriter class. It saves a DataFrame as a table: Spark writes the data to storage and registers the table, under the provided name, in its catalog, which is typically backed by an Apache Hive metastore. It is not a general-purpose connector to external systems; writing to Apache HBase or to a JDBC-compliant database goes through the corresponding data source instead (for JDBC, DataFrameWriter.jdbc()). Once saved, the table can be accessed by name from Spark SQL or the DataFrame API.

2. Saving a DataFrame as a Table

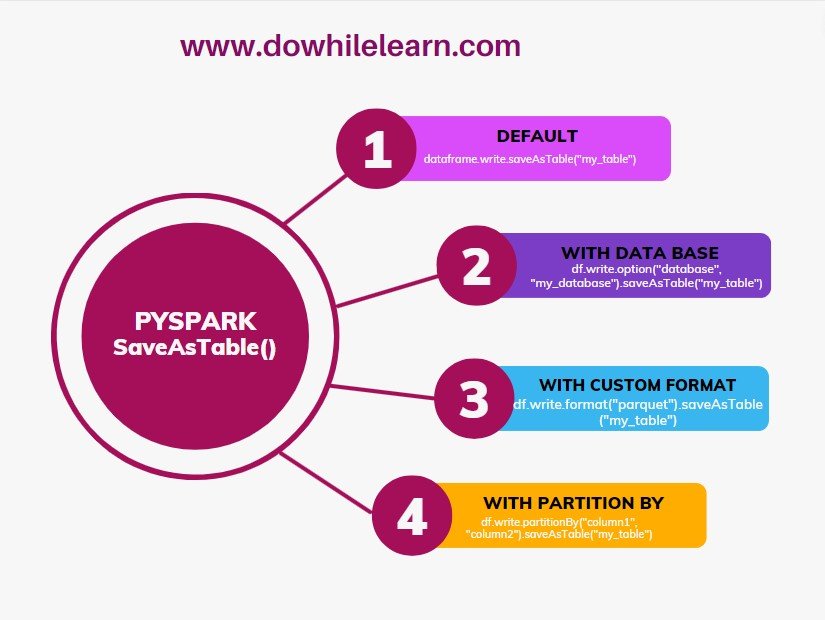

Let’s explore different scenarios where the saveAsTable() method can be used to save DataFrames as tables.

2.1 Default Database

To save the contents of a DataFrame, df, as a table called “my_table” in the default database, the following code snippet can be used:

df.write.saveAsTable("my_table")

In this example, Spark uses the DataFrame’s schema to create the table “my_table” in the default database. By default, the call fails if a table with that name already exists.
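As a rough end-to-end sketch of the default-database case, the helper below builds a small DataFrame, saves it, and reads it back by name. The function name `save_and_read_back` and the use of `mode("overwrite")` (added only so the sketch can be rerun) are assumptions for illustration; `spark` is expected to be an active SparkSession.

```python
def save_and_read_back(spark, rows, columns, table_name):
    """Save rows as a table in the current database and load it again.

    `spark` is expected to be an active pyspark.sql.SparkSession.
    """
    # Spark infers the column types from the Python values in `rows`.
    df = spark.createDataFrame(rows, columns)
    # Assumption: "overwrite" makes the sketch rerunnable. Without a mode,
    # saveAsTable raises an error if the table already exists.
    df.write.mode("overwrite").saveAsTable(table_name)
    # Read the table back by name through the session catalog.
    return spark.table(table_name)
```

With a session obtained from `SparkSession.builder.getOrCreate()`, a call such as `save_and_read_back(spark, [(1, "a"), (2, "b")], ["id", "label"], "my_table")` returns a DataFrame backed by the saved table.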

2.2 Specific Database for saveAsTable

To save the contents of a DataFrame, df, as a table called “my_table” in the “my_database” database, you can use the following code:

df.write.saveAsTable("my_database.my_table")

By qualifying the table name with the database name, the table is created in the desired database instead of the default database. The database must already exist.
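One commonly used way to target a database is to qualify the table name as `database.table`. The sketch below does that and first creates the database if it is missing; the helper name `save_to_database` is hypothetical, and `spark` and `df` are assumed to be an active SparkSession and a DataFrame.

```python
def save_to_database(spark, df, database, table):
    """Save `df` as `database.table`, creating the database if needed."""
    # Saving into a database that does not exist typically fails,
    # so make sure it is there first.
    spark.sql(f"CREATE DATABASE IF NOT EXISTS {database}")
    # A qualified name places the table in that database.
    qualified_name = f"{database}.{table}"
    df.write.saveAsTable(qualified_name)
    return qualified_name
```

An alternative is to switch the session's current database with `spark.catalog.setCurrentDatabase("my_database")` and then save with an unqualified table name.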

2.3 Specific Format for saveAsTable

If you want to save the contents of a DataFrame, df, as a table called “my_table” using a specific file format like Parquet, you can use the following code:

df.write.format("parquet").saveAsTable("my_table")

By specifying the format using the format() method, the table is saved in the specified format. In this example, the Parquet file format is used.

2.4 Specific Partition Columns for saveAsTable

Partitioning data can be beneficial for organizing and optimizing storage. To save a DataFrame, df, as a table called “my_table” with specific partition columns, you can use the following code:

df.write.partitionBy("column1", "column2").saveAsTable("my_table")

By using the partitionBy() method and providing the desired partition column names, the table is saved with the specified partition columns.
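A small guard can catch a misspelled partition column before any data is written. The following is a minimal sketch, assuming `df` is a PySpark DataFrame; the helper name `save_partitioned` is hypothetical.

```python
def save_partitioned(df, table_name, partition_cols):
    """Save `df` as a table partitioned by `partition_cols`."""
    # df.columns is the list of column names in the DataFrame.
    missing = [c for c in partition_cols if c not in df.columns]
    if missing:
        raise ValueError(f"Partition columns not in DataFrame: {missing}")
    # Each distinct combination of partition values gets its own directory.
    df.write.partitionBy(*partition_cols).saveAsTable(table_name)
```

Columns with a small number of distinct values (dates, regions) tend to make better partition keys than high-cardinality ones, which can produce a very large number of small files.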

2.5 External Table

By default, Spark saves tables as managed tables in the configured metastore. However, if you want to save the DataFrame as an external table, you can specify the storage location using the “path” option. Here’s an example:

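df.write.option("path", "/path/to/table").saveAsTable("my_table")

By providing the “path” option with the desired storage location, the DataFrame is saved as an external table. Dropping an external table removes only its metastore entry; the files at that location are left in place.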
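To confirm how a table was registered, the catalog can be inspected after saving. This is a sketch under the assumption that `spark` is an active SparkSession and the table lives in the current database; the helper names `save_external` and `table_type` are hypothetical.

```python
def save_external(df, table_name, path):
    """Save `df` as a table whose data lives at `path`."""
    # Supplying "path" stores the data at that location
    # instead of the warehouse directory.
    df.write.option("path", path).saveAsTable(table_name)


def table_type(spark, table_name):
    """Return the catalog's table type, or None if the table is not found."""
    # listTables() with no argument lists tables in the current database.
    for table in spark.catalog.listTables():
        if table.name == table_name:
            return table.tableType
    return None
```

For a table saved with `save_external`, `table_type(spark, "my_table")` would be expected to report `EXTERNAL`, while a table saved without a path would be expected to report `MANAGED`.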

3. Use Cases of PySpark saveAsTable()

The saveAsTable() method in Spark offers several use cases and benefits:

- Data Persistence: With saveAsTable(), you can persist the data of a DataFrame as a table in a database. This is useful when you want to reuse the data later for further analysis or processing.
- Data Sharing: Tables created using saveAsTable() can be shared across multiple Spark applications or users that use the same metastore. This facilitates collaborative data analysis and lets different users work with the same dataset.
- Table Management: saveAsTable() provides a straightforward way to manage tables. Combined with a save mode, it allows you to create new tables, overwrite existing ones, or append data to existing ones.
- SQL Integration: Tables created with saveAsTable() can be queried directly with Spark SQL, which makes it easier to perform complex operations on them.
- Data Lake Integration: By saving DataFrames as tables, Spark can integrate with data lake technologies like Apache Hive or other compatible systems. This integration leverages the metadata management capabilities of these systems and improves data organization and accessibility.
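The table management and SQL integration points can be sketched as follows. The helper names `write_table` and `count_rows` are hypothetical, and `spark` and `df` are assumed to be an active SparkSession and a DataFrame; the mode strings are the ones accepted by DataFrameWriter.mode().

```python
VALID_MODES = ("append", "overwrite", "ignore", "error", "errorifexists")


def write_table(df, table_name, mode="errorifexists"):
    """Save `df` as a table using an explicit save mode."""
    if mode not in VALID_MODES:
        raise ValueError(f"Unknown save mode: {mode!r}")
    # "append" adds rows, "overwrite" replaces the table, "ignore" does
    # nothing if the table exists, and "error"/"errorifexists" raises.
    df.write.mode(mode).saveAsTable(table_name)


def count_rows(spark, table_name):
    """Count the rows of a saved table with Spark SQL."""
    row = spark.sql(f"SELECT COUNT(*) AS n FROM {table_name}").first()
    return row["n"]
```

For example, calling `write_table(df, "my_table", "overwrite")` followed by `write_table(df, "my_table", "append")` would leave the table with twice the rows of `df`, which `count_rows(spark, "my_table")` can confirm.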

In this article, we explored the saveAsTable() method in Spark, which saves DataFrames as tables in databases. We covered various examples, including saving tables in default and specific databases, using different file formats, specifying partition columns, and creating external tables. We also discussed the use cases of saveAsTable() for data persistence, sharing, table management, SQL integration, and data lake integration. Used this way, saveAsTable() is a practical tool for data management and analytics in Spark.